The Next Step in Agentic AI: Accountability

The best AI agents don't just automate tasks; they earn trust through transparency and accountability. Here are five prerequisites that turn Agentic AI from a promising technology into a reliable teammate your organization can depend on.


Only a few years ago, LLMs capable of writing several coherent paragraphs were a revelation. A few versions later, fluent text generation became the norm, and attention shifted to groundedness: whether the generated text corresponds to facts.

Today, as we use LLMs in AI agents that not only generate text but also take actions, groundedness becomes even more critical, and progress is being made almost every day. But a new challenge is emerging: accountability.

Unlike humans, AI agents lack the social context that governs human interaction. When we deal with people, we trust them based on social cues and implicit commitments. When someone makes a significant error, we expect them to feel embarrassed and to face social pressure to correct the mistake and prevent it from recurring. When working with people, we can be confident (in most cases) that they understand their actions have real-world consequences. This isn't true for LLMs. You can accuse them of anything, and they will generate a heartfelt, well-phrased apology that often has zero influence on their future behavior. They cannot worry about the outcomes of their actions or about reputational damage. Sometimes this is a strength, but for building trust, it's a critical weakness.

So how do we build trust in AI agents handling important tasks?

One could argue that AI agents just need to become much smarter and stop making mistakes: that we should reduce hallucinations to zero and make agents attacker-proof. I argue that this is both too much and too little to ask:

Too little, because the trust problem runs deeper than performance. Think about self-driving cars: even where they match or exceed human driving abilities, questions of trust, legal accountability, and handling unpredictable situations still hold back adoption. Trusting AI Agents requires a psychological and methodological shift that is hard to make even when the agents perform exceptionally well.

Too much, because I think we can achieve trust without waiting for AI Agents to be perfect.

What we need from agents is accountability, not perfection. We want to know that when we assign a task to an agent, it will do what it takes to get it done, or make sure we know it can't. We want agents we can trust, at least in certain environments. This can be achieved even if they are not perfect at their job, just as humans are not perfect 100% of the time. In this blog post, I outline what accountability entails for AI Agents and how we can achieve it. This won't qualify AI Agents to handle our nuclear weapon codes right away, but it establishes the foundation of trust needed to expand their responsibilities over time.

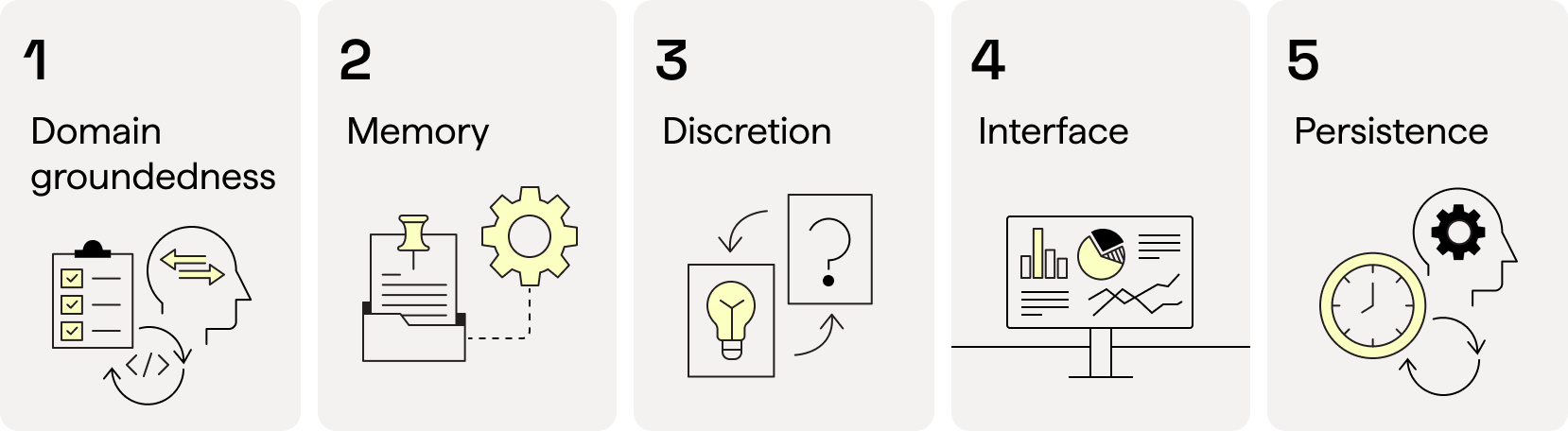

5 Prerequisites for Accountable AI Agents

1. Domain-Groundedness

As mentioned, groundedness is still a hard problem for LLMs; it became a focus relatively late and remains a work in progress. But for agents to be accountable, we need them to be grounded in reality. We need to feel confident that they know what they're talking about, that they understand.

I believe groundedness can be achieved at the system level, even without making LLMs fully grounded in reality. This comes with a concession: accountability might be limited to a specific domain.

For domain-specific accountability, domain-groundedness is enough. For example, it’s easy to trust an agent to write code when you have full test coverage and a pipeline that verifies its results. If it writes text, it’s easier to trust when you require citations and have a process for checking them. Simply put, you can use external tools to ground the agent, even if the underlying model isn’t grounded out of the box.

Accountability requires a holistic system that can verify and test your AI Agents' outputs and provide them with the groundedness they currently lack.
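To make this concrete, here is a minimal Python sketch of such a system-level gate, under stated assumptions: the agent's output is accepted only if every external check passes, and otherwise the failures are reported instead of a silent 'done'. `verify_output`, `Verdict`, `citations_resolve`, the `[doc-id]` citation convention, and `KNOWN_SOURCES` are all hypothetical stand-ins; in practice a check might run your test suite or resolve citations against a real document store.

```python
import re
from dataclasses import dataclass
from typing import Callable

# A check inspects the agent's output and returns (passed, message).
Check = Callable[[str], tuple[bool, str]]


@dataclass
class Verdict:
    accepted: bool
    failures: list[str]


def verify_output(output: str, checks: list[Check]) -> Verdict:
    """Accept the output only if every external check passes; otherwise report why."""
    failures = []
    for check in checks:
        passed, message = check(output)
        if not passed:
            failures.append(message)
    return Verdict(accepted=not failures, failures=failures)


# Hypothetical domain data: the sources the agent is allowed to cite.
KNOWN_SOURCES = {"doc-1", "doc-2"}


def citations_resolve(output: str) -> tuple[bool, str]:
    """Assumed convention: citations appear as [doc-id] and must point to known sources."""
    cited = set(re.findall(r"\[([\w-]+)\]", output))
    if not cited:
        return False, "no citations"
    missing = cited - KNOWN_SOURCES
    if missing:
        return False, f"unknown sources: {sorted(missing)}"
    return True, "ok"


print(verify_output("Revenue grew [doc-1].", [citations_resolve]))
# Verdict(accepted=True, failures=[])
print(verify_output("Revenue grew [doc-9].", [citations_resolve]))
# Verdict(accepted=False, failures=["unknown sources: ['doc-9']"])
```

The design choice is that the checks live outside the model, so the system as a whole stays grounded in its domain even when the model on its own isn't.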

2. Memory

When you train a human employee, they will invariably make mistakes, take initiative, and learn things they didn’t understand before. When this happens, you can count on them to remember the lesson next time.

With LLMs, this is more complex. Since AI agents are usually stateless, all of their memory must be provided to them through system prompts, retrieval-augmented generation (RAG), or tools. When you give feedback to an agent, it's hard to be confident that it will remember and apply that feedback next time. At best, the feedback is written to memory, and it may or may not be retrieved the next time it's relevant.

Accountable AI Agents need to possess a robust memory paradigm. Users should be able to explain to their agents what’s crucial and what’s minor, and help them create stronger associations between each memory item and the hints that should evoke it, similar to what we expect from humans.
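One way to picture this is a memory store where each item carries an importance weight set by the user and a set of cue words that should evoke it. The Python sketch below is a hypothetical illustration, not a description of any particular product: `AgentMemory`, `remember`, `reinforce`, `recall`, the importance scale, and the simple word-overlap scoring are all assumptions, and a real system would more likely match cues with embeddings than with exact words.

```python
import re
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    text: str
    importance: float  # hypothetical scale: 1.0 = minor, 3.0 = crucial
    cues: set[str] = field(default_factory=set)  # hints that should evoke this item


class AgentMemory:
    def __init__(self) -> None:
        self.items: list[MemoryItem] = []

    def remember(self, text: str, importance: float, cues: set[str]) -> MemoryItem:
        """Store a lesson along with how much it matters and what should trigger it."""
        item = MemoryItem(text, importance, {cue.lower() for cue in cues})
        self.items.append(item)
        return item

    def reinforce(self, item: MemoryItem, cue: str) -> None:
        """Strengthen recall by associating an existing item with another hint."""
        item.cues.add(cue.lower())

    def recall(self, context: str, k: int = 3) -> list[str]:
        """Return up to k items whose cues appear in the context, most important first."""
        words = set(re.findall(r"[a-z0-9-]+", context.lower()))
        scored = []
        for item in self.items:
            hits = len(item.cues & words)
            if hits:
                scored.append((item.importance * hits, item.text))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


mem = AgentMemory()
rule = mem.remember("Never deploy on Fridays.", importance=3.0, cues={"deploy", "release"})
mem.remember("Use tabs in Makefiles.", importance=1.0, cues={"makefile"})
print(mem.recall("deploy after editing the makefile"))
# ['Never deploy on Fridays.', 'Use tabs in Makefiles.']
```

Calling `reinforce` plays the role of telling a colleague "this also applies to hotfixes": after `mem.reinforce(rule, "hotfix")`, a request that mentions a hotfix surfaces the deployment rule even though it never says "deploy".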

3. Discretion

A close relative of the hallucination problem is a lack of discretion. When you ask a developer to implement a feature, for example, they will automatically apply common sense. They will adjust the solution to the problem's importance and size, as they see it.

In a healthy work culture, they will push back against changes that are overly complex relative to their customer value, providing important feedback to the product owner.

People will also pick up on cues from whoever assigns them tasks to gauge the importance and urgency of the task, and adjust accordingly. How fast do we want the job done? How central is it to our product? Who will use it? How much risk can we take? These are all questions that translate into the feature’s soft requirements, which are seldom made explicit.

AI Agents don’t excel at this out of the box. They are often yea-sayers who don’t ask many questions about the tasks they are assigned, and certainly don’t challenge them. When this is the case, much like a human employee who blindly follows instructions, they ultimately lack accountability for their actions, which makes them harder to trust.

Accountable AI Agents will exercise discretion, so they can later explain why they did what they did, completing the circle of accountable task resolution.
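A deliberately simple way to illustrate this is a triage step that runs before any work starts: if the soft requirements behind the questions above are unstated, the agent asks rather than guesses, and either way it records a rationale it can point to later. This is a hypothetical Python sketch; `triage`, `Decision`, and the `SOFT_REQUIREMENTS` keys are assumptions for illustration, and real discretion involves far more judgment than checking whether a field is present.

```python
from dataclasses import dataclass, field

# The soft requirements from the questions above, keyed by hypothetical names.
SOFT_REQUIREMENTS = {
    "urgency": "How fast do we want the job done?",
    "centrality": "How central is it to our product?",
    "audience": "Who will use it?",
    "risk_tolerance": "How much risk can we take?",
}


@dataclass
class Decision:
    action: str  # "ask" (push back with questions) or "proceed"
    rationale: list[str] = field(default_factory=list)  # kept so the agent can explain itself later
    questions: list[str] = field(default_factory=list)


def triage(known: dict[str, str]) -> Decision:
    """Decide whether to start work or first ask about unstated soft requirements."""
    missing = [key for key in SOFT_REQUIREMENTS if key not in known]
    if missing:
        return Decision(
            action="ask",
            rationale=[f"'{key}' was not specified" for key in missing],
            questions=[SOFT_REQUIREMENTS[key] for key in missing],
        )
    return Decision(
        action="proceed",
        rationale=[f"{key}: {known[key]}" for key in SOFT_REQUIREMENTS],
    )


decision = triage({"urgency": "end of week", "audience": "internal analysts"})
print(decision.action)     # ask
print(decision.questions)  # ['How central is it to our product?', 'How much risk can we take?']
```

The recorded rationale is the part that matters for accountability: whether the agent proceeds or pushes back, it leaves a trace of why.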

4. Interface

Humans know how to talk to other humans (in most cases...). Approaching others has an intuitive 'interface', more so than chatting with a faceless entity.

One challenge in assigning tasks to an AI agent is that you can’t really see what it does. It’s hard to get the confidence that it really understands you and works as expected. Combine that with hallucinations, and suddenly you’re not even sure what it means when it gleefully says it’s 'done'.

In fact, the easiest way to 'break' an AI Agent is often to talk about its interface, which is invisible to the AI Agent yet central to the user. It’s as if you had an employee you communicate with over the phone, but they don’t understand what a 'phone' even is. Worse, they pretend to understand.

Accountable AI Agents will need to work with an intuitive, human-friendly interface. It could be as simple as a GUI that matches the task at hand, or as comprehensive as a suite of visibility tools that make its actions observable.

5. Persistence

When we give a task to a person, our expectations extend beyond the time it takes to execute it. We might want them to follow up on tasks after they are completed, to remind us of earlier tasks that may have been forgotten, and to understand and challenge new information in light of a previous task. They may even come up with ideas about a task while not working on it.

AI Agents don’t exist when you don’t run them. This means someone has to 'wake them up' if something goes wrong while they are working on a task, someone needs to inform them if there’s an issue with a previous task, and so on. This undermines accountability. An AI Agent can't be accountable unless it can continuously check its actions and verify whether they achieved their goals. To be meaningfully accountable, agents need persistence.
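Persistence can be approximated from the outside with a scheduler that keeps re-verifying a task after it's 'done' and raises an alert when the goal stops holding. The Python sketch below assumes a hypothetical `FollowUp` record with a `goal_met` check per task, and a `FollowUpScheduler` that something external (a cron job, a worker loop) calls periodically with the current time; none of these names come from a specific framework.

```python
import heapq
from dataclasses import dataclass, field
from typing import Callable


@dataclass(order=True)
class FollowUp:
    due: float  # time of the next check; the only field used for ordering
    task_id: str = field(compare=False)
    goal_met: Callable[[], bool] = field(compare=False)  # re-verifies the task's outcome
    interval: float = field(default=3600.0, compare=False)  # seconds between re-checks


class FollowUpScheduler:
    """Keeps completed tasks under watch so nobody has to wake the agent up manually."""

    def __init__(self) -> None:
        self._queue: list[FollowUp] = []

    def schedule(self, follow_up: FollowUp) -> None:
        if follow_up.interval <= 0:
            raise ValueError("interval must be positive")
        heapq.heappush(self._queue, follow_up)

    def run_due(self, now: float) -> list[str]:
        """Run every check due by `now`; return IDs of tasks whose goal no longer holds."""
        alerts = []
        while self._queue and self._queue[0].due <= now:
            item = heapq.heappop(self._queue)
            if not item.goal_met():
                alerts.append(item.task_id)  # surface the problem instead of staying silent
            item.due = now + item.interval  # keep checking on future runs
            heapq.heappush(self._queue, item)
        return alerts


service = {"healthy": True}
scheduler = FollowUpScheduler()
scheduler.schedule(FollowUp(due=10.0, task_id="patch-42", goal_met=lambda: service["healthy"], interval=60.0))
print(scheduler.run_due(10.0))  # []
service["healthy"] = False
print(scheduler.run_due(70.0))  # ['patch-42']
```

In a real deployment, `goal_met` might query monitoring or re-run a test, and alerts would go back to the agent and to a human owner. Passing `now` in explicitly, rather than reading the clock inside `run_due`, keeps the logic easy to test.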


Ready to maximize your cyber team’s efficiency with our first Digital Employee, Alex?