What if access permissions could manage themselves, granted or revoked in real time, without human bottlenecks?

It sounds futuristic, but it’s already here. Agentic AI brings reasoning and autonomy to cybersecurity, and especially to Identity and Access Management (IAM), where it can offload tedious manual processes. Yet before trusting AI to make access decisions, it's important to understand what it can do and how to establish trust in it.

How to Build Trust with Agentic AI

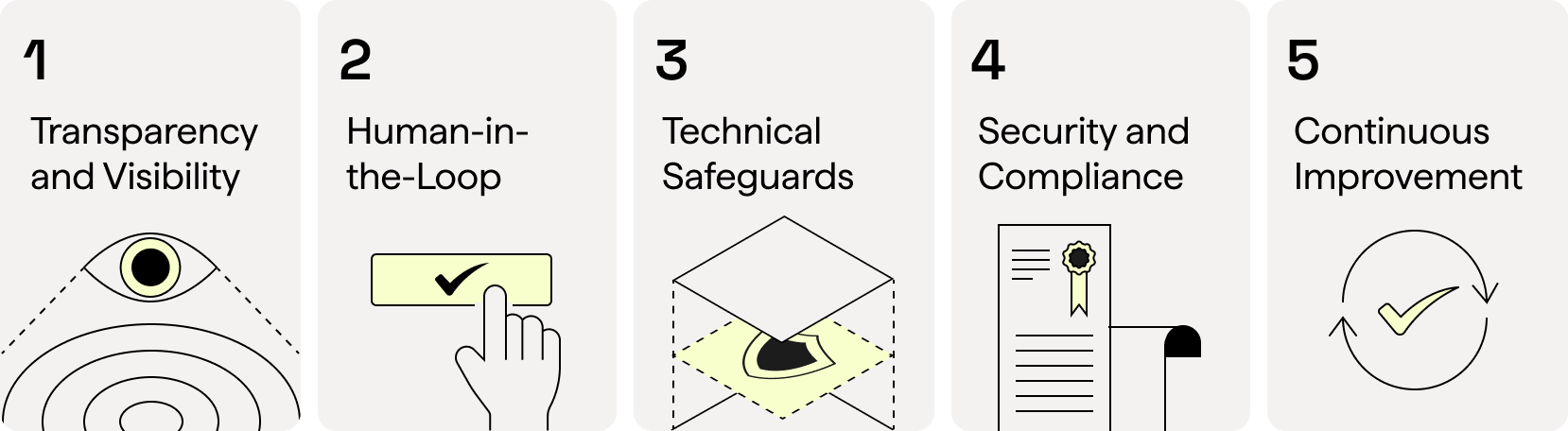

As AI agents become more capable of handling complex business tasks, a critical challenge emerges: how do you convince customers to trust an AI agent with their most important processes? Forward-thinking startups are pioneering new approaches to building this trust, moving beyond flashy demos to create genuine confidence in AI-powered solutions. Here are five trust-building strategies:

1) Transparency and Explainability:

Great AI agents don't just deliver results; they show how they work. Agentic AI systems must be able to explain their own decisions, because systems that can explain themselves are easier to audit, easier to govern, and easier to trust. According to Deloitte’s 2024 State of AI report, 44 percent of enterprise leaders plan to invest in explainability over the next year.

With enhanced transparency mechanisms, customers can peer into the agent's decision-making process, examine the reasoning chains that led to specific recommendations, and watch progress in real time.

When an AI agent generates code, a transparent agent doesn't simply present the finished result. It reveals its process, allowing customers to inspect which patterns it considered, why it rejected certain approaches, and how it validated its solution.

This openness extends to planning phases as well. Customers can see how AI agents break down complex projects into manageable steps, understand the dependencies between tasks, and even observe when the system encounters uncertainty and seeks additional guidance.
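For an access decision, transparency can be as simple as having the agent return its reasoning alongside its verdict. The minimal Python sketch below assumes a single illustrative rule (an active account that belongs to a required group); `AccessDecision` and `decide_access` are hypothetical names, not part of any product API.

```python
from dataclasses import dataclass, field


@dataclass
class AccessDecision:
    """An access verdict plus the human-readable reasons that produced it."""
    user: str
    resource: str
    granted: bool = False
    reasons: list[str] = field(default_factory=list)


def decide_access(user: str, resource: str, user_groups: set[str],
                  required_group: str, account_active: bool) -> AccessDecision:
    """Apply one illustrative rule and record every step of the reasoning."""
    decision = AccessDecision(user, resource)
    if not account_active:
        decision.reasons.append(f"{user}'s account is inactive, so access is denied")
        return decision
    decision.reasons.append(f"{user}'s account is active")
    if required_group in user_groups:
        decision.granted = True
        decision.reasons.append(
            f"{user} belongs to {required_group}, so access to {resource} is granted")
    else:
        decision.reasons.append(
            f"{user} does not belong to {required_group}, so access to {resource} is denied")
    return decision


if __name__ == "__main__":
    result = decide_access("alice", "payroll-db", {"finance", "staff"}, "finance", True)
    for step in result.reasons:
        print(step)
```

Storing `reasons` with each decision gives auditors a readable record of why access was granted or denied, rather than a bare yes or no.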

2) Human-in-the-Loop (HITL):

Humans provide critical judgment for security decisions and offer valuable feedback to improve and train ML models. AI agents should enhance human decision-making rather than replace it. They should therefore keep humans in the loop for critical decisions while handling routine execution themselves. Customers should be able to define which decisions require human sign-off based on risk level, financial thresholds, or strategic importance, and they should retain the ability to override the agent's recommendations and configure its workflows.

This collaborative approach serves a dual purpose: it builds confidence by maintaining human control, while gradually demonstrating the AI's reliability. As customers witness consistent performance over time, they naturally become comfortable delegating more responsibility to their AI agents.
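A customer-defined sign-off policy can be expressed as a small routing function. The sketch below assumes each proposed action already carries a risk score between 0 and 1 and a flag for privileged changes; `ProposedAction`, `route_action`, and the 0.7 default threshold are illustrative assumptions that a customer would tune to its own risk appetite.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    description: str
    risk_score: float         # assumed: 0.0 (routine) to 1.0 (critical), from an upstream scorer
    privileged: bool = False  # e.g. the action grants admin rights


def route_action(action: ProposedAction, approval_threshold: float = 0.7) -> str:
    """Return "auto" if the agent may execute on its own, else "needs_approval"."""
    if action.privileged or action.risk_score >= approval_threshold:
        return "needs_approval"
    return "auto"


if __name__ == "__main__":
    for a in [
        ProposedAction("Reset MFA for a contractor", 0.2),
        ProposedAction("Grant production admin role", 0.4, privileged=True),
        ProposedAction("Bulk-remove 300 group memberships", 0.85),
    ]:
        print(f"{a.description}: {route_action(a)}")
```

Privileged changes always go to a human here, regardless of score, which mirrors the idea that some decisions require sign-off by policy rather than by a number.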

3) Technical Safeguards:

Behind the scenes, it's important to implement multiple layers of technical safeguards that work together to ensure consistent, reliable performance. These aren't single-point solutions, but rather comprehensive systems designed to catch and correct errors before they impact customers.

Multi-model consensus mechanisms represent one such innovation. Rather than relying on a single AI model, these systems employ multiple models that independently analyze problems and cross-validate solutions. When models disagree, the system flags the decision for human review, significantly reducing the likelihood of errors reaching customers.
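The flag-on-disagreement rule can be sketched in a few lines. This sketch assumes the verdicts have already been collected from independently run models (how those models are invoked is out of scope), and `consensus` and its `quorum` parameter are hypothetical names.

```python
from collections import Counter


def consensus(votes: list[str], quorum: float = 1.0) -> tuple[str, bool]:
    """Combine independent model verdicts.

    Returns (majority_verdict, needs_human_review). With the default
    quorum of 1.0, any disagreement at all routes the decision to a human.
    """
    if not votes:
        raise ValueError("at least one model verdict is required")
    verdict, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return verdict, agreement < quorum


if __name__ == "__main__":
    print(consensus(["grant", "grant", "grant"]))  # ('grant', False)
    print(consensus(["grant", "deny", "grant"]))   # ('grant', True)
```

Lowering `quorum` (for example to 0.6) lets a clear majority proceed on low-risk decisions while still escalating split votes.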

Constraint layers add another dimension of protection, embedding rules and requirements directly into the AI's decision-making process. These guardrails ensure that AI agents cannot make recommendations that violate company policies, regulatory requirements, or industry best practices, regardless of what they might consider optimal from a purely algorithmic perspective.
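One way to picture a constraint layer is as a check that runs after the agent proposes a change and before anything executes, so policy wins regardless of what the model recommends. The rules below (a separation-of-duties pair and a department restriction) and all names are hypothetical examples.

```python
from dataclasses import dataclass

# Hypothetical example policies; a real deployment would load these from its own policy source.
TOXIC_ROLE_PAIRS = [frozenset({"payments-initiator", "payments-approver"})]
ROLE_ALLOWED_DEPARTMENTS = {"payroll-admin": {"finance", "hr"}}


@dataclass(frozen=True)
class RoleRecommendation:
    user: str
    role: str
    department: str


def policy_violations(rec: RoleRecommendation, current_roles: set[str]) -> list[str]:
    """Return every rule the recommendation breaks; an empty list means it may proceed."""
    problems = []
    for pair in TOXIC_ROLE_PAIRS:
        # Separation of duties: the new role must not complete a forbidden pair.
        if rec.role in pair and (pair - {rec.role}) & current_roles:
            problems.append(f"separation of duties: {rec.user} cannot hold both {sorted(pair)}")
    allowed = ROLE_ALLOWED_DEPARTMENTS.get(rec.role)
    if allowed is not None and rec.department not in allowed:
        problems.append(f"{rec.role} is not permitted for the {rec.department} department")
    return problems


if __name__ == "__main__":
    rec = RoleRecommendation("bob", "payments-approver", "finance")
    print(policy_violations(rec, current_roles={"payments-initiator"}))
```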

An additional safeguard is to tackle the hallucination problem head-on with validation systems. Rather than simply hoping models won't generate false information, build verification mechanisms that cross-reference outputs against reliable sources and flag potentially inaccurate content before it reaches customers.
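In IAM, a reliable source is often the identity directory itself. The sketch below assumes the agent's output has been parsed into claims of the form "user X is in group Y" and that the directory has been exported into a plain dictionary; `unverified_claims` is a hypothetical helper, and anything it returns would be held back for review rather than acted on.

```python
def unverified_claims(claims: dict[str, set[str]],
                      directory: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return, per user, the group memberships the agent asserted that the directory does not confirm."""
    flagged: dict[str, set[str]] = {}
    for user, groups in claims.items():
        actual = directory.get(user)
        if actual is None:
            flagged[user] = set(groups)  # the user does not exist in the source of truth at all
            continue
        missing = groups - actual
        if missing:
            flagged[user] = missing
    return flagged


if __name__ == "__main__":
    directory = {"alice": {"finance", "staff"}, "bob": {"staff"}}
    agent_claims = {"alice": {"finance"}, "bob": {"staff", "admins"}, "eve": {"staff"}}
    print(unverified_claims(agent_claims, directory))
```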

4) Security and Compliance:

Trust in AI agents extends beyond their decision-making capabilities to fundamental questions of data security and regulatory compliance. Implementing enterprise-grade security measures from day one is crucial. These include end-to-end data encryption, role-based access controls that ensure users only see information relevant to their responsibilities, and comprehensive audit trails that track every interaction between humans and AI agents. Adherence to relevant regulations and standards (GDPR, SOC 2, etc.) signals to customers that AI agents meet the same security and privacy standards they expect from traditional enterprise software.
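Audit trails are most useful when tampering is detectable. A common technique is hash chaining: each entry stores the SHA-256 hash of the previous entry, so editing a recorded entry in place breaks verification. The `AuditLog` class below is a minimal, in-memory illustration using only the Python standard library; its name and fields are assumptions, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Serialize with sorted keys so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which every entry is chained to the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, target: str) -> dict:
        body = {
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "target": target,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; False means the log was altered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("agent", "grant", "alice:payroll-db")
    log.record("human:reviewer", "approve", "alice:payroll-db")
    print(log.verify())
```

Hash chaining makes tampering evident rather than impossible; it complements, but does not replace, restricting who can write to the log.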

5) Continuous Improvement:

The most sophisticated approach to building customer trust involves systems that demonstrably improve over time through structured feedback loops and performance measurement. These systems actively solicit feedback from users, not just on final outcomes but on intermediate steps and reasoning processes. That input flows back into model training and system refinement, producing visible improvements that customers can measure against their own business objectives. Customers can see how their AI agents affect key performance indicators, whether that means reducing processing time, improving accuracy rates, or identifying opportunities that human analysts might miss.

As with a new human employee, customers start with high oversight and manual approval requirements, then progressively allow more automated decision-making as confidence builds.
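The progression from high oversight to greater autonomy can be tied to a measurable track record. The sketch below assumes every reviewed recommendation is logged as agreed or not, and maps the agreement rate to a risk threshold below which the agent may act alone; `TrackRecord`, the 50-review minimum, and the tier values are arbitrary illustrations, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class TrackRecord:
    reviewed: int = 0  # agent recommendations a human has reviewed
    agreed: int = 0    # reviewed recommendations the human approved unchanged

    def add_review(self, human_agreed: bool) -> None:
        self.reviewed += 1
        if human_agreed:
            self.agreed += 1

    def autonomy_threshold(self, min_reviews: int = 50) -> float:
        """Risk score below which the agent may act without sign-off (0.0 means never)."""
        if self.reviewed < min_reviews:
            return 0.0  # not enough history yet: everything goes to a human
        rate = self.agreed / self.reviewed
        if rate >= 0.99:
            return 0.7
        if rate >= 0.95:
            return 0.4
        return 0.0


if __name__ == "__main__":
    record = TrackRecord()
    for _ in range(100):
        record.add_review(human_agreed=True)
    print(record.autonomy_threshold())  # 0.7
```

Because the threshold is recomputed from the record, autonomy also shrinks automatically if reviewers start rejecting more of the agent's recommendations.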

Want to Take the IAM Weight Off Your Security Team’s Back?

Introducing Alex, Twine’s first AI digital employee, who learns, understands, and takes on the burden of identity management tasks, proactively completing your organization’s cyber objectives.

The problem with today’s identity and access management (IAM) tools is that they generate far more work than initially expected: setup, deployment, and ongoing upkeep. Legacy systems are excessively complicated, require highly skilled operators, and do not ensure thorough deprovisioning. The result is residual traces, orphaned accounts, and over-privileged accounts.
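To make the deprovisioning gap concrete: an orphaned account is one that still exists in an application after its owner has left. Detecting such accounts amounts to a set difference between each application's account owners and the list of active employees. The sketch below assumes both have already been exported as plain Python sets; `find_orphaned_accounts` is a hypothetical helper, and a real environment would also need to reconcile differing username formats across applications.

```python
def find_orphaned_accounts(app_accounts: dict[str, set[str]],
                           active_employees: set[str]) -> dict[str, set[str]]:
    """Map each application to the accounts whose owners are no longer active employees."""
    orphans: dict[str, set[str]] = {}
    for app, owners in app_accounts.items():
        leftover = owners - active_employees
        if leftover:
            orphans[app] = leftover
    return orphans


if __name__ == "__main__":
    accounts = {"github": {"alice", "bob"}, "salesforce": {"carol"}, "jira": {"alice"}}
    active = {"alice", "carol"}
    print(find_orphaned_accounts(accounts, active))  # {'github': {'bob'}}
```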

Until now, no technology has been able to fully replicate human capabilities in the IAM cybersecurity vertical.

With Alex, cybersecurity teams finally have a high-performing digital employee who joins the team and autonomously executes IAM tasks as directed, from A to Z.

Onboard Alex to maximize cyber efficiency and get the most out of your existing Identity toolset.